[SPARK-44742][PYTHON][DOCS] Add Spark version drop down to the PySpark doc site #42428

This is more like a new page than a "drop down".

python/docs/source/index.rst

@@ -24,7 +24,7 @@ PySpark Overview

**Date**: |today| **Version**: |release|

**Useful links**:

|binder|_ | `GitHub <https://github.com/apache/spark>`_ | `Issues <https://issues.apache.org/jira/projects/SPARK/issues>`_ | |examples|_ | `Community <https://spark.apache.org/community.html>`_

|binder|_ | `GitHub <https://github.com/apache/spark>`_ | `Issues <https://issues.apache.org/jira/projects/SPARK/issues>`_ | |examples|_ | `Community <https://spark.apache.org/community.html>`_ | `Historical versions of documentation <history/index.html>`_

Is there another website showing such a link? I think the wording is a bit too long, given that it is not supposed to be frequently used.

Okay, let me try turning it into a dropdown combobox form.

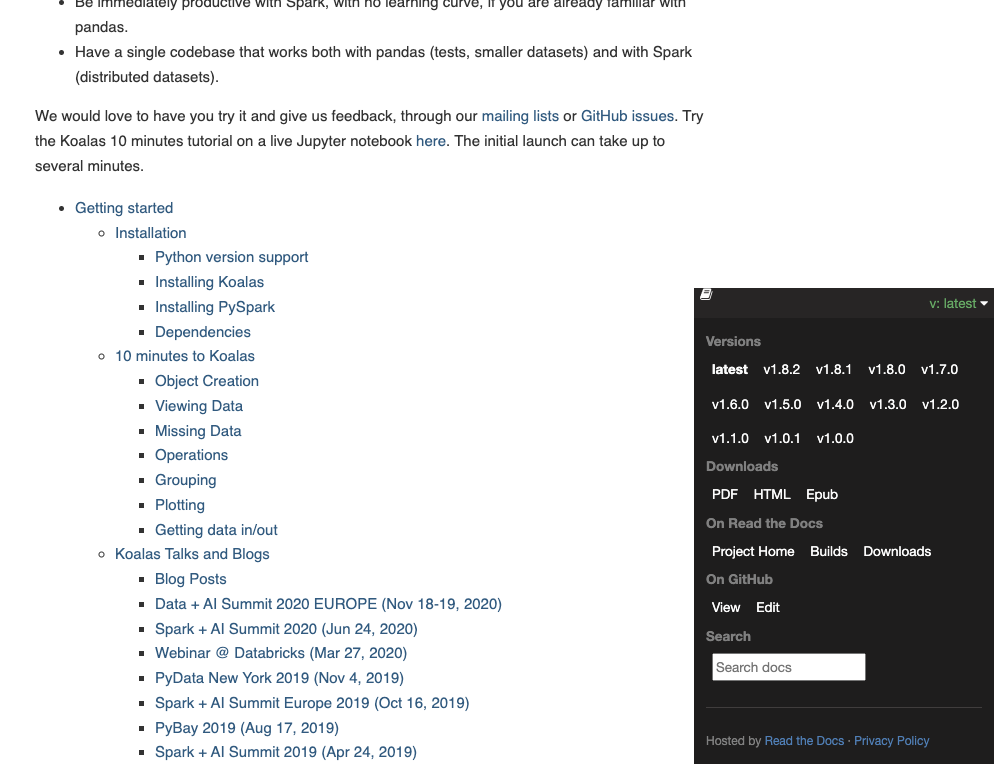

Can we have a dropdown box like https://pandas.pydata.org/docs/ or https://koalas.readthedocs.io/en/latest/? You could refer to https://github.com/pandas-dev/pandas/tree/main/doc/source.

Thanks, let me do it.

@gengliangwang @HyukjinKwon

    "name": "0.7.0",
    "version": "0.7.0"
  }
]
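The fragment above is the tail of a JSON list in which every entry carries a `name` and a `version` key, and later in the thread the dropdown's default is said to be set to `release`. The Python sketch below shows one way to load such a list, check its shape, and pick the entry matching the current release. The function names and the fall-back-to-first-entry rule are hypothetical illustrations, not the PR's JavaScript.

```python
import json
from typing import Dict, List


def load_versions(text: str) -> List[Dict[str, str]]:
    """Parse a version-switcher JSON list and check the shape of each entry."""
    entries = json.loads(text)
    if not isinstance(entries, list) or not entries:
        raise ValueError("expected a non-empty JSON list of version entries")
    for i, entry in enumerate(entries):
        if not isinstance(entry, dict):
            raise ValueError(f"entry {i} is not a JSON object")
        # Every entry should carry the two keys seen in the PR's JSON fragment.
        missing = {"name", "version"} - set(entry)
        if missing:
            raise ValueError(f"entry {i} is missing keys: {sorted(missing)}")
    return entries


def default_entry(entries: List[Dict[str, str]], release: str) -> Dict[str, str]:
    """Return the entry whose version equals `release`.

    Hypothetical fallback: if nothing matches, return the first entry,
    assuming the list is ordered newest first.
    """
    for entry in entries:
        if entry["version"] == release:
            return entry
    return entries[0]


# Illustrative input only; not the contents of the PR's file.
sample = '[{"name": "3.5.0", "version": "3.5.0"}, {"name": "0.7.0", "version": "0.7.0"}]'
versions = load_versions(sample)
print(default_entry(versions, "0.7.0")["name"])  # prints 0.7.0
```

Validating the file up front means a malformed entry fails at build time rather than leaving an empty dropdown in the browser.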

Why are there no links for versions below 0.7.0? I found that the historical directory has no corresponding PySpark documentation for versions below 0.7.0.

https://spark.apache.org/docs

I think that is because we don't have PySpark before 0.7 :)

@panbingkun The latest screenshot looks good! I am wondering if we should 1) remove the

The new doc looks pretty good!

1. Okay, done. See spark/python/docs/source/conf.py, lines 121 to 128 in 46580ab.

And the default value of the dropdown has also been set to 'release', as shown in https://github.com/apache/spark/pull/42428/files#diff-0711e6775ff3eb4e0fb47fc40afecfe946211842573dae2c5eb872bbe53df45fR2-R5. In the test below, when I set

@gengliangwang @HyukjinKwon would you mind taking another look when you find some time?

Hi @panbingkun, in the built doc here, the dropdown looks like the following. Is this expected?

@gengliangwang Because the Ajax request is blocked by cross-origin restrictions, we need to open it locally in this way for testing:
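The exact local setup referred to above is not preserved here. One common alternative, sketched below and not necessarily what was used in this PR, is to serve the built HTML over `http://127.0.0.1` instead of opening it via `file://`, so the page's request for the versions JSON is an ordinary same-origin fetch. The function name `serve_docs` is hypothetical; `http.server.ThreadingHTTPServer` and the `directory` argument of `SimpleHTTPRequestHandler` are standard library features from Python 3.7 onward.

```python
import functools
import http.server
import threading


def serve_docs(directory: str, port: int = 0) -> http.server.ThreadingHTTPServer:
    """Serve `directory` over HTTP on 127.0.0.1 from a background thread.

    port=0 asks the OS for any free port; read the chosen one from
    server.server_address[1]. Call shutdown() and server_close() when done.
    """
    # Bind the handler to the docs directory instead of the current working dir.
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=directory
    )
    server = http.server.ThreadingHTTPServer(("127.0.0.1", port), handler)
    # Daemon thread, so the server does not keep the interpreter alive on exit.
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

For example, `serve_docs("python/docs/build/html", 8000)` would expose the site at `http://127.0.0.1:8000/index.html`, assuming `make html` writes its output to `python/docs/build/html`. From a shell, `python -m http.server 8000 --directory python/docs/build/html` does the same thing.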

@@ -0,0 +1,278 @@

[

I wonder if we had better remove EOL releases... but no strong opinion. WDYT, @srowen and @dongjoon-hyun?

Yes, let's even just start with the latest versions, as a convenience for switching. People can always find older versions manually, but we don't need to make them extra discoverable.

Done.

We have to remember to update this with new releases. I wonder, is there another document we could add that step to?

Also, I think we could even retain just the latest version in each release branch?

Yeah, I will add this logic to `release-build.sh`, which is what I want to do in the next PR.

Or would it be more suitable to do it in this PR? 😄
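The thread suggests dropping EOL releases and keeping only the latest version of each release branch, with the list regenerated at release time. The release-step logic itself is not shown here, so the Python sketch below only illustrates the selection rule: keep the newest patch of every `major.minor` branch, then keep only the `keep_branches` most recent branches. The names `latest_per_branch` and `keep_branches` are hypothetical, and the sketch assumes plain numeric versions (a tag like `3.0.0-preview` would need extra parsing).

```python
from typing import Dict, Iterable, List, Tuple


def parse(version: str) -> Tuple[int, ...]:
    """Turn a plain numeric version such as "3.4.1" into (3, 4, 1)."""
    return tuple(int(part) for part in version.split("."))


def latest_per_branch(versions: Iterable[str], keep_branches: int) -> List[Dict[str, str]]:
    """Keep the newest patch of each major.minor branch, newest branches first.

    Only the `keep_branches` most recent branches survive, so older
    (for example EOL) branches drop out of the generated list.
    """
    newest: Dict[Tuple[int, ...], str] = {}
    for v in versions:
        branch = parse(v)[:2]
        # Compare numerically so that 3.4.10 beats 3.4.9.
        if branch not in newest or parse(v) > parse(newest[branch]):
            newest[branch] = v
    recent = sorted(newest, reverse=True)[:keep_branches]
    # Emit entries in the same {"name", "version"} shape as the PR's JSON.
    return [{"name": newest[b], "version": newest[b]} for b in recent]


# Illustrative input only; not the actual list from the PR.
releases = ["3.4.0", "3.4.1", "3.3.2", "3.5.0", "3.3.3", "2.4.8"]
print(latest_per_branch(releases, keep_branches=3))
# [{'name': '3.5.0', 'version': '3.5.0'}, {'name': '3.4.1', 'version': '3.4.1'}, {'name': '3.3.3', 'version': '3.3.3'}]
```

Passing the result to `json.dump(..., indent=2)` would write a file in the shape shown earlier. Deriving the list from the set of published releases, rather than editing it by hand, is one way to address the "we have to remember to update this" concern.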

@@ -0,0 +1,60 @@

<div id="version-button" class="dropdown">

Let's put the license header:

<!--
Licensed to the Apache Software Foundation (ASF) under one or more
contributor license agreements. See the NOTICE file distributed with
this work for additional information regarding copyright ownership.
The ASF licenses this file to You under the Apache License, Version 2.0
(the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
-->

Looks pretty good otherwise, cc @gengliangwang and @sarutak FYI

Alright, let's see how it goes. Merged to master and branch-3.5.

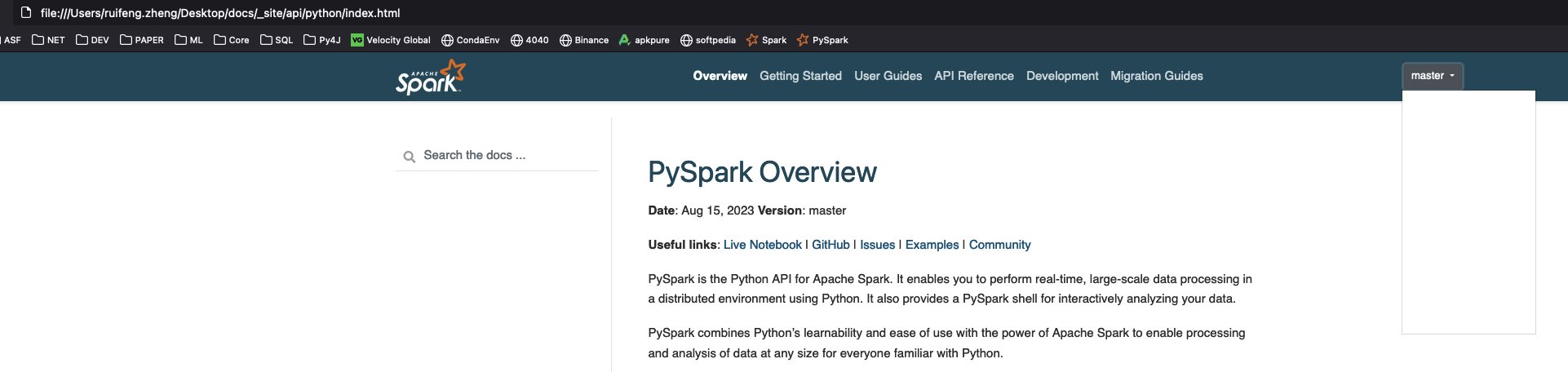

…k doc site

### What changes were proposed in this pull request?
The pr aims to add Spark version drop down to the PySpark doc site.

### Why are the changes needed?
Currently, PySpark documentation does not have a version dropdown. While by default we want people to land on the latest version, it will be helpful and easier for people to find docs if we have this version dropdown.

### Does this PR introduce _any_ user-facing change?
No.

### How was this patch tested?
Manual testing.
```
cd python/docs
make html
```

Closes #42428 from panbingkun/SPARK-44742.

Authored-by: panbingkun <pbk1982@gmail.com>
Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>
(cherry picked from commit 49ba244)
Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

@HyukjinKwon @panbingkun I reverted this change in branch-3.5 because it caused a doc build failure. Please help fix this on the master branch. The issue can be reproduced by running the release script's docs step in dry-run mode:

Yeah, that's fine. Let's revert.

Sure, thanks for investigating this.

…o 0.8.0 in `spark-rm` Dockerfile

### What changes were proposed in this pull request?
The pr is followup #42428.

### Why are the changes needed?
To fix issue: When our `pydata_sphinx_theme` version is `0.4.1`, there may be issues with not recognizing some configuration items.
<img width="927" alt="image" src="https://github.com/apache/spark/assets/15246973/7ec54bb2-8c28-4863-8374-b3a5369873fc">

### Does this PR introduce _any_ user-facing change?
No.

### How was this patch tested?
Manual testing.

### Was this patch authored or co-authored using generative AI tooling?
No.

Closes #42730 from panbingkun/SPARK-44742_FOLLOWUP.

Authored-by: panbingkun <pbk1982@gmail.com>
Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

…o 0.8.0 in `spark-rm` Dockerfile

### What changes were proposed in this pull request?
The pr is followup #42428.

### Why are the changes needed?
To fix issue: When our `pydata_sphinx_theme` version is `0.4.1`, there may be issues with not recognizing some configuration items.
<img width="927" alt="image" src="https://github.com/apache/spark/assets/15246973/7ec54bb2-8c28-4863-8374-b3a5369873fc">

### Does this PR introduce _any_ user-facing change?
No.

### How was this patch tested?
Manual testing.

### Was this patch authored or co-authored using generative AI tooling?
No.

Closes #42730 from panbingkun/SPARK-44742_FOLLOWUP.

Authored-by: panbingkun <pbk1982@gmail.com>
Signed-off-by: Dongjoon Hyun <dhyun@apple.com>
(cherry picked from commit 1712515)
Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

What changes were proposed in this pull request?

This PR adds a Spark version dropdown to the PySpark documentation site.

Why are the changes needed?

Currently, the PySpark documentation has no version dropdown. While by default we want people to land on the latest version, a version dropdown would make it easier for people to find the docs they need.

Does this PR introduce any user-facing change?

No.

How was this patch tested?

Manual testing.